Reverse-engineering cognitive and neural representations

The Cognitive and Neural Computation Lab (CNCL) aims to understand how our minds and brains transform raw sensory inputs into rich, discrete structures that we can think about, plan with, and manipulate. To this end, the lab takes a primarily computational approach within a distinctly integrative program, building models of seeing and thinking that explain phenomena spanning the cognitive and neural levels. These computational theories draw on an especially broad technical toolkit, including probabilistic programming, causal generative models, nonlinear dynamics and control, approximate Bayesian inference, and artificial neural networks. The lab tests these models in objective, performance-based psychophysical experiments in humans. It also tests them against neural data from non-human primate experiments, obtained through experimental collaborators, and in human imaging experiments that the group designs and executes. With this multilevel program and multifaceted methodology, the lab is developing a computational account of the mind and brain's representations of the physical world with unprecedented depth and empirical force. This account includes formal specifications of perceptual representations, the mechanisms by which these representations are selectively deployed and cast into memory, and the ways they are implemented in multi-area neural algorithms and dynamics across the brain, all in precise engineering terms.

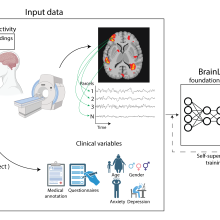

Methods

Topics

Biography

Ilker Yildirim received his Bachelor's and Master's degrees in Computer Science from Bogazici University, Istanbul, in 2007 and 2009, respectively, and his PhD in Brain and Cognitive Sciences from the University of Rochester in 2014. After a postdoc at MIT, he started his lab at Yale University in 2019 as an Assistant Professor of Psychology. His partner, Meltem, is a physician at Yale Health. They have two children, Birol and Nimet, who are skilled negotiators.